We are lined up among the big players in cyber security. Ashburn Consulting is a vendor-agnostic consulting company. We recommend solutions that satisfy customer requirements and align with their budgets.

Category: Security Engineering

Next Generation Firewall Overview with a Glimpse of Application Identification

“I am not an advocate for frequent changes in laws and constitutions, but laws and institutions must go hand in hand with the progress of the human mind. As that becomes more developed, more enlightened, as new discoveries are made, new truths discovered and manners and opinions change, with the change of circumstances, institutions must advance also to keep pace with the times. We might as well require a man to wear still the coat which fitted him when a boy as a civilized society to remain ever under the regimen of their barbarous ancestors.”

- Excerpted from a letter from Thomas Jefferson, July 12, 1816

Interestingly, Thomas Jefferson’s quote could not be more appropriate when assessing today’s state of IT network security. When people hear “IT network security,” one of the first devices that comes to mind is a firewall. Early firewalls were not much more than extended access lists. As security requirements grew, Check Point Software introduced stateful packet inspection to address several security issues, most notably certain types of man-in-the-middle (MITM) attacks. As stateful inspection took off, many network engineers ran into implementation difficulties. Asymmetric routing in particular became a problem: engineers had to control network paths with a fine-tooth comb to confirm that forward and return traffic took the same route, because otherwise the return traffic would be dropped by stateful inspection. Part of the reason for introducing stateful inspection was to reduce the ability of a man in the middle to intercept traffic and send it back without the end user being aware that their network traffic had been compromised.
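To make the asymmetric-routing problem concrete, here is a toy model of a stateful firewall’s connection table, written as a minimal Python sketch. It assumes a simplified 5-tuple flow key and ignores TCP flags, timeouts, and NAT, all of which real implementations track; the `Flow` and `StatefulFirewall` names are hypothetical. Outbound packets create state, and an inbound packet is accepted only if it is the reverse of a flow the same firewall has already seen.

```python
from typing import NamedTuple, Set


class Flow(NamedTuple):
    """Simplified 5-tuple identifying one direction of a connection (illustrative only)."""
    src: str
    sport: int
    dst: str
    dport: int
    proto: str

    def reverse(self) -> "Flow":
        # Return traffic swaps source and destination address/port.
        return Flow(self.dst, self.dport, self.src, self.sport, self.proto)


class StatefulFirewall:
    """Toy state table: outbound flows create state; inbound packets must match it."""

    def __init__(self) -> None:
        self.state: Set[Flow] = set()

    def outbound(self, flow: Flow) -> bool:
        # Remember the connection so its replies can be recognised later.
        self.state.add(flow)
        return True

    def inbound(self, flow: Flow) -> bool:
        # Accept only replies to a flow that left through THIS firewall.
        return flow.reverse() in self.state


fw_a, fw_b = StatefulFirewall(), StatefulFirewall()

# Symmetric path: request and reply both cross firewall A.
req = Flow("10.0.0.5", 51000, "203.0.113.10", 443, "tcp")
fw_a.outbound(req)
print(fw_a.inbound(req.reverse()))  # True

# Asymmetric path: request leaves via firewall B, reply returns via firewall A.
req2 = Flow("10.0.0.6", 51001, "203.0.113.10", 443, "tcp")
fw_b.outbound(req2)
print(fw_a.inbound(req2.reverse()))  # False: A has no state, so the reply is dropped
```

In the second case firewall A never saw the request, so the legitimate reply looks unsolicited and is dropped, which is why forward and return paths had to be kept symmetric.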

Fast forward to the present: Next Generation Firewalls (NGFWs) and layer 7 inspection are introducing a new evolution in IT security whose functionality is critical to today’s IT security landscape. With many vendors introducing their own take on layer 7 inspection, the importance of this upgrade in capabilities is more apparent than ever, much as it was when stateful packet inspection was originally introduced. Most earlier firewalls were port based, in the sense that ports 80 and 443 might be open outbound to allow web and SSL traffic.

With that knowledge, a malicious user could exploit a port-based firewall by running any application over these ports, even though they were originally intended for web browsing and SSL traffic. Unless another security appliance capable of application inspection sits on the network, nothing prevents a user from running any application they want over the open ports, from unwanted chat and BitTorrent clients up to and including tools like nmap.
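A minimal Python sketch of why this works: a port-based rule only ever looks at the destination port, so the application riding on that port never enters the decision. The `ALLOWED_PORTS` policy and the application labels are hypothetical illustrations, not any vendor’s configuration.

```python
# Hypothetical legacy, port-based policy: outbound web (80) and SSL (443) are open.
ALLOWED_PORTS = {80, 443}


def port_based_allows(dst_port: int) -> bool:
    """A port-based rule sees only the destination port, never the application."""
    return dst_port in ALLOWED_PORTS


# Whatever actually runs over the port, the verdict is the same.
sessions = [(443, "ssl"), (443, "bittorrent"), (80, "web-browsing"), (80, "nmap")]
for port, app in sessions:
    verdict = "allow" if port_based_allows(port) else "deny"
    print(f"{app:>12} on {port}: {verdict}")  # every session above is allowed
```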

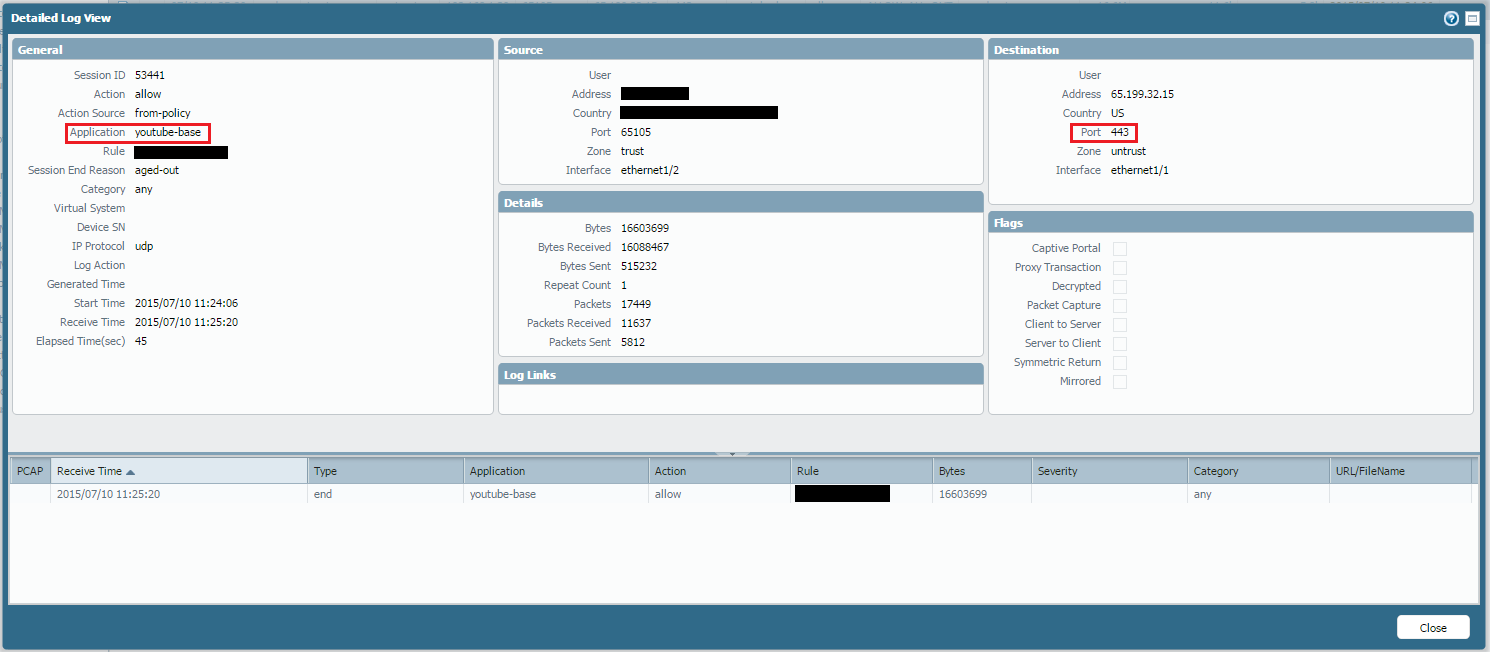

NGFWs enable network security engineers to restrict not only ports but also the applications running over them. They do this by inspecting the contents of each packet beyond the traditional source/destination address and port carried in the headers. This visibility lets an engineer go beyond restricting ports, for example allowing only web browsing over port 80 or only SSL traffic over port 443, and blocking any traffic on those ports that is not identified as web browsing or SSL. That stops a malicious user from running other applications such as torrent clients or nmap over those ports, and can even save bandwidth by stopping applications such as YouTube, Google Hangouts, FaceTime, Minecraft, and others that may be unwanted on the network. In Figure 1, in addition to the source and destination, you can see a user viewing YouTube over port 443, which is traditionally open; this firewall’s functionality enables a network security engineer to allow only SSL and web browsing while blocking YouTube. Note that encrypted SSL traffic can be decrypted for inspection; that topic will be covered in a separate article.
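By contrast, an application-aware rule pairs each open port with the applications permitted on it. The Python sketch below assumes the application label (`"ssl"`, `"web-browsing"`, `"youtube"`, and so on) has already been produced by the firewall’s application-identification engine; the `APP_POLICY` table and function name are hypothetical and only mirror the Figure 1 scenario.

```python
from typing import Dict, Set

# Hypothetical application-aware policy: each open port lists the only
# applications permitted on it; everything else is denied by default.
APP_POLICY: Dict[int, Set[str]] = {
    80: {"web-browsing"},
    443: {"ssl", "web-browsing"},
}


def app_aware_allows(dst_port: int, application: str) -> bool:
    """Allow only if the port is open AND the identified application is permitted on it."""
    return application in APP_POLICY.get(dst_port, set())


print(app_aware_allows(443, "ssl"))         # True
print(app_aware_allows(443, "youtube"))     # False: blocked even though 443 is open
print(app_aware_allows(443, "bittorrent"))  # False
print(app_aware_allows(22, "ssh"))          # False: port not open at all
```

The hard part in a real NGFW is producing the application label reliably; the policy lookup itself stays this simple.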

This new capability requires specific configuration to implement correctly. During migrations, many engineers who do not understand why some traffic stops working under the new application-layer rules remove some of that application-layer configuration to restore usability, opening gaps in security. This trade-off means the new technology is not being used correctly; it is effectively putting a child’s coat on an adult, because it does not fit how the IT security landscape has evolved. Implementing this technology correctly is critical to providing secure and trusted network transactions and to preventing malicious users from abusing legacy firewall functionality.

ICMP Security

This is a draft guide to handling ICMP securely.

Analysis and Guide to Handling the ICMP Protocol

Summary:

This guide is an attempt to help answer common questions about handling the ICMP protocol in a secure and effective manner. Comments and feedback are always welcome. It covers the major areas in which questions arise about how to handle ICMP and which message types specifically should be allowed in each situation, while still allowing for effective risk mitigation. If you need specifics on the ICMP codes within each ICMP type, please refer to the reference URLs below.

Major ICMP Protocol Types:

– 0: Echo Reply

– 3: Destination Unreachable

– 4: Source Quench

– 5: Redirect (change a route)

– 8: Echo Request

– 9: Router Advertisement

– 10: Router Solicitation

– 11: Time Exceeded for a Datagram

– 12: Parameter Problem on a Datagram

– 13: Timestamp Request

– 14: Timestamp Reply

– 17: Address Mask Request

– 18: Address Mask Reply
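For reference when reading firewall logs or packet captures, the types above map directly onto the first byte of the ICMPv4 header, which is followed by a 1-byte code and a 2-byte checksum. The Python sketch below assumes `data` already starts at the ICMP header (the IP header has been stripped); the function name is hypothetical.

```python
import struct
from typing import Tuple

# Names for the ICMP types listed above.
ICMP_TYPES = {
    0: "Echo Reply",
    3: "Destination Unreachable",
    4: "Source Quench",
    5: "Redirect",
    8: "Echo Request",
    9: "Router Advertisement",
    10: "Router Solicitation",
    11: "Time Exceeded",
    12: "Parameter Problem",
    13: "Timestamp Request",
    14: "Timestamp Reply",
    17: "Address Mask Request",
    18: "Address Mask Reply",
}


def parse_icmp_header(data: bytes) -> Tuple[int, int, str]:
    """Return (type, code, name) from the first 4 bytes of an ICMPv4 message."""
    if len(data) < 4:
        raise ValueError("an ICMP header is at least 4 bytes long")
    # Network byte order: type (1 byte), code (1 byte), checksum (2 bytes).
    icmp_type, icmp_code, _checksum = struct.unpack("!BBH", data[:4])
    return icmp_type, icmp_code, ICMP_TYPES.get(icmp_type, "Unknown")


print(parse_icmp_header(bytes([3, 4, 0, 0])))  # (3, 4, 'Destination Unreachable')
```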

Areas of Effect:

Perimeter

Outbound: Echo Reply (0), Echo Request (8) (For Troubleshooting)

Deny: All types except TTL Exceeded (11) and Destination Unreachable (Type 3, Code 4) from limited external testing devices.
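The perimeter rules can be sketched as a single decision function. This Python sketch rests on two loudly stated assumptions: that the Deny line applies to inbound traffic, and that the “limited external testing devices” qualifier applies to both exceptions. The testing-device range is a hypothetical placeholder taken from a documentation address block, and “TTL Exceeded” is modelled as type 11, code 0.

```python
from ipaddress import ip_address, ip_network

# Hypothetical "limited external testing devices"; 198.51.100.0/28 is a
# documentation range standing in for your own test hosts.
TESTING_DEVICES = [ip_network("198.51.100.0/28")]

# Outbound: Echo Reply (0) and Echo Request (8), for troubleshooting.
OUTBOUND_ALLOWED_TYPES = {0, 8}


def perimeter_allows(direction: str, icmp_type: int, icmp_code: int, src: str) -> bool:
    if direction == "outbound":
        return icmp_type in OUTBOUND_ALLOWED_TYPES
    if direction != "inbound":
        raise ValueError(f"unknown direction: {direction}")
    # Assumed reading: inbound ICMP is denied unless it is TTL exceeded in
    # transit (11/0) or fragmentation needed (3/4) AND comes from a testing device.
    is_exception = (icmp_type, icmp_code) in {(11, 0), (3, 4)}
    from_tester = any(ip_address(src) in net for net in TESTING_DEVICES)
    return is_exception and from_tester


print(perimeter_allows("inbound", 3, 4, "198.51.100.5"))  # True
print(perimeter_allows("inbound", 3, 4, "203.0.113.9"))   # False: not a testing device
print(perimeter_allows("inbound", 8, 0, "198.51.100.5"))  # False: echo request denied inbound
```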

Interior (Corporate Network)

Internal Deny: Should be handled on a case-by-case basis; however, where permissible, squelch Redirect (5), Router Advertisement (9), Router Solicitation (10), Timestamp Request (13), Timestamp Reply (14), Address Mask Request (17), and Address Mask Reply (18). Much of the usefulness of these ICMP message types has been superseded by DHCP and NTP.

Internal Allow: Echo Reply (0), Destination Unreachable (3, Code 4), Echo Request (8), and Time Exceeded (11).

Remote Access & Site to Site VPN

VPN Allow: Echo Reply (0), Destination Unreachable (3, Code 4), and Echo Request (8).

VPN Deny: Everything Else

Intranet to Intranet / Partner to Partner

Intranet to Intranet Allow: Echo Reply (0), Destination Unreachable (3, Code 4), Echo Request (8), and Time Exceeded (11).

Intranet to Intranet Deny: Everything Else
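The interior, VPN, and intranet rules above share most of their allow lists, so they fit naturally in one table. In this Python sketch the zone names are hypothetical; VPN and intranet traffic is default-deny as the guide states, while for the interior only the listed squelch types are denied and anything else unlisted is returned as `"review"` to reflect the guide’s case-by-case handling. Treating “squelch when permissible” as a hard deny is an assumption.

```python
from typing import Dict, FrozenSet, Optional, Tuple

# A rule is (type, code); a code of None means "any code of this type".
Rule = Tuple[int, Optional[int]]

# Echo Reply (0), Destination Unreachable (3, Code 4), Echo Request (8).
COMMON_ALLOW: FrozenSet[Rule] = frozenset({(0, None), (3, 4), (8, None)})

ZONE_ALLOW: Dict[str, FrozenSet[Rule]] = {
    "interior": COMMON_ALLOW | {(11, None)},  # plus Time Exceeded (11)
    "vpn": COMMON_ALLOW,
    "intranet": COMMON_ALLOW | {(11, None)},  # plus Time Exceeded (11)
}

# Types the guide suggests squelching internally when permissible.
INTERIOR_SQUELCH = frozenset({5, 9, 10, 13, 14, 17, 18})


def matches(rules: FrozenSet[Rule], icmp_type: int, icmp_code: int) -> bool:
    return (icmp_type, None) in rules or (icmp_type, icmp_code) in rules


def icmp_decision(zone: str, icmp_type: int, icmp_code: int) -> str:
    if zone not in ZONE_ALLOW:
        raise ValueError(f"unknown zone: {zone}")
    if matches(ZONE_ALLOW[zone], icmp_type, icmp_code):
        return "allow"
    if zone == "interior" and icmp_type not in INTERIOR_SQUELCH:
        return "review"  # left to case-by-case judgement in the guide
    return "deny"  # VPN / intranet: everything else is denied


print(icmp_decision("vpn", 11, 0))       # deny
print(icmp_decision("interior", 12, 0))  # review
```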

References:

PMTU

http://www.tcpipguide.com/free/t_IPDatagramSizetheMaximumTransmissionUnitMTUandFrag-4.htm

ICMP

http://www.tcpipguide.com/free/t_ICMPv4TimestampRequestandTimestampReplyMessages-3.htm

Syracuse University ICMP Lecture Notes

Inc. 5000 List of Fastest-Growing Companies


Ashburn Consulting has made the Inc. 5000 list of the fastest-growing companies in the nation! This is a tremendous accomplishment, and I couldn’t be any prouder of each and every one of you who has made this a reality! We thank all of our dedicated and talented employees for their commitment to excellence in making AC what it has become today! Grateful, Jim Burris

http://www.inc.com/profile/ashburn-consulting